AI Research

Are you curious about the rapidly growing field of AI research and computing? Artificial Intelligence (AI) is a field of computer science focused on developing machines that can perform tasks that normally require human intelligence.

AI has become an integral part of our lives, from self-driving cars to personalized recommendations on streaming services, and the algorithms behind these systems, including reinforcement learning, continue to improve.

Google is one of the leading companies in AI research, using machine learning algorithms to analyze vast amounts of data and identify patterns and insights.

To explore this field further, Google collaborates with academic institutions such as Stanford University.

So how do you conduct AI research?

There are several key factors to consider when studying AI as a research area. First, it is crucial to understand what AI is and how it relates to machine learning systems.

Another critical aspect of conducting AI research is knowing how to use computers and computing resources for research purposes. This can involve using machine learning algorithms to analyze data sets or building custom models for specific applications.

Visualization can also help you better understand the results of an AI analysis.

Knowing how to write an influential AI research paper is essential. This involves following standard academic writing conventions while presenting complex technical information clearly and concisely.

Additionally, including visualizations of your machine learning systems can help convey the idea behind your research.

Ultimately, the approach to conducting successful AI research involves staying up-to-date with current trends and developments in the field while also being able to think critically about potential applications for the technology.

Keeping an eye on developments in machine learning systems, exploring new ideas in reinforcement learning, and using visualization techniques to better understand complex data can also be helpful.

So whether you’re looking to develop suitable AI applications, read informative papers, or learn more about this exciting field, there’s never been a better time to get involved in AI research!

And with steady progress being made on many of the challenges in AI development, now is a great time to jump in and explore the possibilities.

Key Takeaways

AI research has evolved significantly since its inception in the 1950s, with current trends including explainable AI, autonomous systems, and quantum computing.

Machine learning and deep learning techniques are transforming the finance, healthcare, and manufacturing industries by enabling computers to learn from large datasets and perform complex tasks like fraud detection or medical imaging analysis.

Natural language processing (NLP) is the area of AI research focused on enabling computers to understand human language. NLP is used in virtual assistants such as Siri and Alexa and in sentiment analysis tools for social media monitoring.

Robotics and automation powered by AI are increasingly prevalent across various industries, but they also raise concerns regarding job displacement.

Computer vision is another exciting field in AI research that focuses on enabling machines to interpret visual information from the world around them, leading to improved diagnostic tools for medical imaging, among other things.

Evolution Of AI Research

AI research has come a long way since its origins in the 1950s, and today’s innovations are impacting society and everyday life in unique ways.

Origins And Early Developments Of AI

The inception of AI research can be traced back to the mid-20th century when mathematician and computer scientist Alan Turing developed the foundational concepts for artificial intelligence.

In 1950, he proposed a test, now known as the Turing Test, which aimed to determine if a machine could exhibit intelligent behavior indistinguishable from a human’s.

During the 1950s and 1960s, early researchers like Marvin Minsky and John McCarthy laid crucial groundwork for future advancements in AI.

Minsky’s early work on neural networks explored how machines could simulate basic learning processes found in biological systems.

Meanwhile, McCarthy coined the term “artificial intelligence” and created the Lisp programming language, an essential tool for many fledgling AI projects.

As interest in AI grew throughout academia during these early years, government agencies began funding key projects with ambitious goals, such as translating between languages or mimicking aspects of human cognition.

One notable success story from this era is ELIZA, a program created by Joseph Weizenbaum in the mid-1960s that simulated conversation by recognizing patterns in user input and generating responses from simple scripts.
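To make the pattern-and-script idea concrete, here is a minimal Python sketch of an ELIZA-style responder. The rules, the pronoun-reflection table, and the function names are hypothetical illustrations, not Weizenbaum's original DOCTOR script; they only show the general mechanism of matching a pattern and filling in a response template.

```python
import re

# Illustrative rules only (not the original ELIZA script): each pairs a
# regular expression with a response template that can reuse captured text.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause (.+)", re.IGNORECASE), "Is that the real reason?"),
]
DEFAULT_REPLY = "Please tell me more."

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Swap pronouns in a captured fragment, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(user_input: str) -> str:
    """Reply using the first rule whose pattern matches, else a default."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT_REPLY


print(respond("I need a break from my job."))  # Why do you need a break from your job?
print(respond("The weather is nice."))         # Please tell me more.
```

There is no understanding involved: the program only rearranges the user's own words, which is why ELIZA's apparent fluency surprised many of its early users.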

Impact Of AI On Society And Everyday Life

AI has already profoundly impacted society and everyday life, with its influence only expected to increase in the coming years.

One of the most significant impacts is AI’s ability to automate tasks previously performed by humans, leading to greater efficiency and cost savings.

In healthcare, AI analyzes vast amounts of data to improve diagnoses and treatments. Additionally, AI-assisted surgical robots help surgeons carry out intricate procedures with greater precision than human hands alone could achieve.

However, there are concerns over job displacement as automation becomes more widespread. A 2017 McKinsey Global Institute study estimated that up to 800 million workers could be displaced globally by automation by 2030.

Current Trends And Innovations In AI Research

AI research constantly evolves, with new trends and innovations emerging each year. Here are some of the current trends and developments in AI research:

Explainable AI: With the increasing complexity of AI models, there is a growing need to understand how they work to facilitate trust and accountability. This trend aims to make AI more transparent by clearly explaining its predictions and decisions.

Autonomous systems: Autonomous systems are becoming increasingly prevalent in various industries, such as transportation, manufacturing, and healthcare. These systems can make decisions and take actions independently without human intervention.

Edge computing: As the demand for real-time processing increases, edge computing is becoming more popular in AI research. By moving processing closer to where the data is generated, edge computing reduces latency and improves response times.

Federated learning: This method allows multiple parties to train a shared machine learning model without sharing their data. It has potential applications in healthcare, finance, and other industries where privacy concerns are paramount.

Generative models: Generative models can create new content, such as images, text, or music, based on patterns learned from existing data. They have been used in various creative applications, including art, fashion design, and music composition.

Reinforcement learning: Reinforcement learning allows an agent to learn by interacting with an environment through trial and error until it achieves a desired outcome. It has shown promise in robotics, gaming, and autonomous driving.

Quantum computing: Because quantum computers may be able to solve certain classes of problems much faster than classical computers, quantum computing has significant potential for AI research, particularly for optimization problems and machine learning algorithms.

In summary, these trends show that AI research continues to push boundaries with cutting-edge technologies that have wide-ranging implications for society – from advancing scientific discovery to transforming how we work and live.
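Of the trends above, federated learning is perhaps the easiest to illustrate in a few lines. The sketch below assumes the well-known federated averaging (FedAvg) scheme and a toy one-variable linear model: each hypothetical client runs a few gradient-descent steps on data it keeps locally, and a coordinator averages only the returned parameters, weighted by how many examples each client holds. Real deployments add secure aggregation, client sampling, and far larger models.

```python
from typing import List, Tuple

Params = Tuple[float, float]         # (w, b) for the toy model y ~ w * x + b
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a client


def local_update(params: Params, data: Dataset, lr: float = 0.05, epochs: int = 20) -> Params:
    """Gradient descent on one client's private data (mean squared error)."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b  # only these two numbers are shared with the coordinator


def federated_averaging(clients: List[Dataset], rounds: int = 50) -> Params:
    """Each round: clients train locally, the coordinator takes a weighted average."""
    global_params: Params = (0.0, 0.0)
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(global_params, data), len(data)) for data in clients]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
        global_params = (w, b)
    return global_params


# Two hypothetical clients whose private data both follow y = 2x + 1.
clients = [[(x, 2 * x + 1) for x in (0, 1, 2)], [(x, 2 * x + 1) for x in (3, 4)]]
w, b = federated_averaging(clients)
print(round(w, 3), round(b, 3))  # should be close to 2.0 and 1.0
```

The shared model recovers the underlying relationship even though no client ever sends its raw (x, y) pairs.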

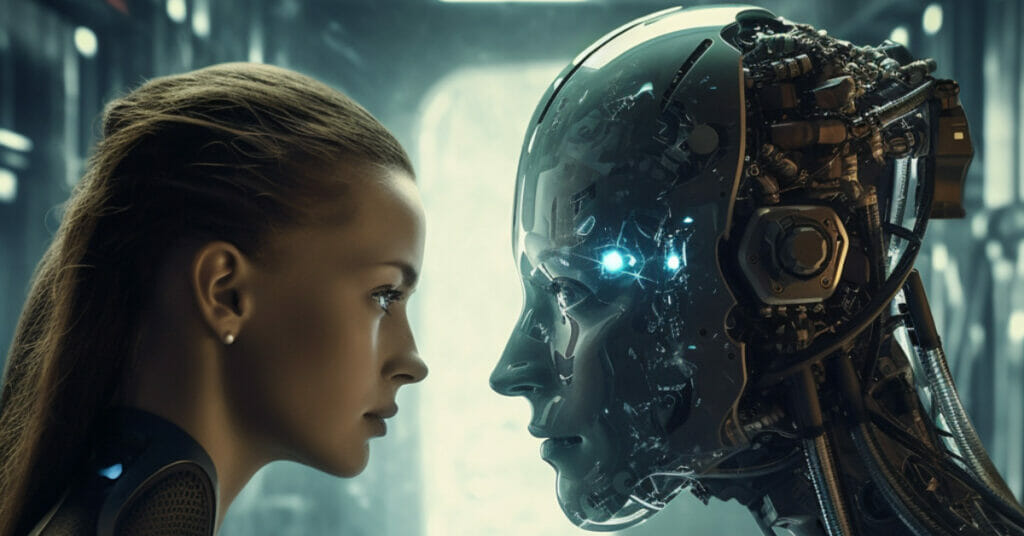

The Difference between Artificial Intelligence and Human Intelligence

What is Artificial Intelligence?

Artificial intelligence (AI) uses computing methods to solve problems that would normally require human intelligence. AI systems are designed to learn from data, recognize patterns, and make decisions based on that information. They can work faster and more consistently than humans, making them valuable in various industries. Research papers on AI can provide insights into how these systems work.

What is Human Intelligence?

Human intelligence is natural and innate, and it has been studied extensively. It allows people to understand complex concepts, think critically, and solve problems creatively. AI applications strive to achieve human-like intelligence, but unlike AI systems, humans can understand natural language and context without specific programming.

How Do They Differ?

The main difference between artificial intelligence and human intelligence lies in their approach to problem-solving.

AI relies on the data it is trained on and the algorithms its developers design to make decisions, while humans use intuition, creativity, and reasoning skills to arrive at a solution.

However, recent papers suggest that AI is advancing rapidly and may soon be able to mimic human intelligence more closely.

While AI can work quickly and efficiently within its programmed parameters, it lacks the flexibility of human intelligence when presented with new or unexpected situations.

Humans also have a capacity for creativity and innovation that machines have so far been unable to replicate, although AI may become better at adapting to new situations as research progresses.

In some respects, however, AI outperforms human intelligence. Machines can process vast amounts of data far faster than humans, making them useful in industries such as finance and healthcare.

AI can also analyze and process research papers quickly, making it a valuable tool for research and academic purposes.

However, one limitation of AI systems is that they cannot reliably interpret language outside the parameters they were trained or programmed for.

Humans can understand sarcasm or irony in conversation because they have an innate understanding of social cues that machines lack.

Advancements In AI Research

Machine learning and deep learning techniques have revolutionized AI research by enabling computers to learn from large datasets and make predictions or decisions without explicit instructions.

Machine Learning And Deep Learning Techniques

Machine learning and deep learning are two of AI research’s most exciting and rapidly evolving branches.

Machine learning involves teaching machines to learn independently by feeding them large amounts of data, allowing them to identify patterns and make predictions based on that information.

One example where machine learning has been successfully applied is fraud detection, with algorithms trained to recognize fraudulent activities in financial transactions.
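As a rough illustration of how a model can be trained to flag suspicious transactions, the following sketch fits a logistic regression by gradient descent. The two features (transaction amount in thousands of dollars and a foreign-merchant flag), the eight labeled examples, and the learning rate are all made up for illustration; production fraud systems learn from far more data and features.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical training data: [amount in $1000s, foreign-merchant flag],
# labeled 1 = fraudulent, 0 = legitimate.
X: List[List[float]] = [[0.02, 0], [0.15, 0], [0.40, 0], [0.08, 1],
                        [2.50, 1], [3.10, 1], [1.90, 1], [4.20, 0]]
y: List[int] = [0, 0, 0, 0, 1, 1, 1, 1]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Estimated probability that a transaction is fraudulent."""
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)


def train_logistic_regression(X, y, lr: float = 0.1, epochs: int = 5000) -> Tuple[List[float], float]:
    """Batch gradient descent on the average log loss."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(X, y):
            error = predict_proba(features, weights, bias) - label
            for j, f in enumerate(features):
                grad_w[j] += error * f
            grad_b += error
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(X)
    return weights, bias


weights, bias = train_logistic_regression(X, y)
print(predict_proba([5.0, 1], weights, bias))   # large foreign purchase: high score
print(predict_proba([0.01, 0], weights, bias))  # small domestic purchase: low score
```

Transactions whose score exceeds a chosen threshold (0.5 here, by assumption) would then be flagged for review.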

In healthcare, deep learning algorithms have been used for medical imaging analysis, where they can detect abnormalities with high accuracy.

The advancements in these techniques are transforming industries across various sectors, including finance, healthcare, and manufacturing, among others, opening up new opportunities while reducing costs if appropriately executed.

Natural Language Processing And Understanding

Natural Language Processing (NLP) is the branch of AI that deals with how computers can interpret human language.

It involves analyzing and understanding human speech or text, which can be incredibly challenging given the complexity and nuances of language.

Today, NLP is used in various applications such as virtual assistants like Siri and Alexa, chatbots, translation software, sentiment analysis tools for social media monitoring, and more.

For example, Google Translate uses NLP techniques to automatically translate written text from one language to another while retaining its original meaning.

Another example is IBM’s Watson Assistant, which uses natural language processing techniques to enable chatbots to converse with users naturally.

However, there are still some challenges in this field regarding understanding different accents or dialects accurately or recognizing sarcasm or humor correctly.
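A minimal way to see how a sentiment analysis tool can work is a lexicon-based scorer. The sketch below assumes a tiny hand-written word list and a simple negation rule, both hypothetical; modern tools typically rely on much larger lexicons or trained language models.

```python
import re
from typing import Dict

# Hypothetical polarity lexicon and negation words, for illustration only.
LEXICON: Dict[str, int] = {"love": 2, "great": 2, "good": 1, "bad": -1, "terrible": -2, "hate": -2}
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum word polarities, flipping the sign of a word preceded by a negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    return score


def label(text: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(text)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


print(label("I love this phone, the camera is great!"))  # positive
print(label("The service was not good"))                 # negative
```

Because it only sums word polarities, a scorer like this rates the sarcastic "Oh great, it broke again" as positive, which illustrates the difficulty with sarcasm noted above.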

Robotics And Automation

A significant part of AI research is focused on robotics and automation.

One example of a successful implementation of robotics and automation is Amazon’s fulfillment centers, which use Kiva robots to move inventory across the warehouse floor.

These robots have reportedly increased efficiency by 50%, freeing employees to focus on more complex tasks.

However, there are concerns about job displacement due to the widespread deployment of these automated systems; some estimates suggest that as many as 20 million jobs could be lost to advances in this field within a decade.

Computer Vision

Computer Vision is an exciting field in AI research that focuses on enabling machines to interpret and understand visual information from the world around them.

This field underpins applications such as facial recognition, object detection, autonomous driving, and even the restoration of old photographs.

Thanks to advancements in computer vision algorithms and machine learning techniques like deep neural networks, computers can now efficiently perform complex tasks such as identifying objects within images or detecting patterns in large data sets.
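Deep neural networks for vision are built largely from convolution layers. The sketch below shows the underlying operation on a tiny grayscale image using a classic Sobel edge-detection kernel, assuming images are plain lists of pixel intensities. In a trained network the kernel values would be learned rather than fixed by hand, and a library such as TensorFlow would perform the computation.

```python
from typing import List

Image = List[List[float]]

# Sobel kernel that responds to left-to-right changes in brightness (vertical edges).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]


def convolve2d(image: Image, kernel: Image) -> Image:
    """Valid-mode 2D cross-correlation, which most deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x5 image: dark pixels (0) on the left, bright pixels (9) on the right.
image = [[0, 0, 9, 9, 9] for _ in range(4)]
for row in convolve2d(image, SOBEL_X):
    print(row)  # [36, 36, 0]: strong response where dark meets bright, none in the flat region
```

A convolutional network stacks many such filters, so later layers can respond to combinations of edges such as corners, textures, and eventually whole objects.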

Ethical Considerations In AI Research

Ethical dilemmas in AI research include bias and discrimination, privacy and security concerns, job displacement, and economic effects.

Bias And Discrimination

One of AI research’s most significant ethical concerns is bias and discrimination. Artificial intelligence systems are only as unbiased as the data they are trained on.

The AI will perpetuate those biases if that data is skewed or discriminatory.

For example, facial recognition technology has been shown to have higher error rates for people with darker skin tones and women than for white men.

To combat bias and discrimination in AI, researchers must ensure that their training datasets are diverse and representative of all groups within society.

Additionally, developers should consider implementing auditing processes to identify any potential biases within their algorithms.
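One simple form of such an audit is to compare a model's error rate across demographic groups, which is the kind of disparity reported for facial recognition systems. In the sketch below, the group names, the records, and the 5-percentage-point tolerance are hypothetical; real audits typically examine several fairness metrics (for example, false positive and false negative rates separately) on much larger samples.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (group, true_label, predicted_label). Groups are hypothetical.
Record = Tuple[str, int, int]


def error_rates_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of incorrect predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        totals[group] += 1
        errors[group] += int(truth != pred)
    return {group: errors[group] / totals[group] for group in totals}


def flag_disparity(rates: Dict[str, float], max_gap: float = 0.05) -> bool:
    """True if the best- and worst-served groups differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap


records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]
rates = error_rates_by_group(records)
print(rates)                  # {'group_a': 0.0, 'group_b': 0.25}
print(flag_disparity(rates))  # True: group_b is misclassified far more often
```

Running a check like this on every new model version makes bias regressions visible before deployment rather than after.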

According to a recent study by McKinsey & Company, addressing bias in AI could generate up to $4 trillion in additional revenue annually across different economic sectors globally.

Privacy And Security Concerns

As AI advances, concerns have been raised regarding its impact on privacy and security.

With AI’s ability to collect and analyze large amounts of data, there is a risk that this information could be used in ways that threaten individuals’ privacy.

There is also the issue of cybersecurity threats posed by AI-powered systems.

As these systems become more complex and interconnected with other devices and networks, they become increasingly vulnerable to cyber attacks such as hacking or malware infections.

To address these issues, researchers are developing encryption techniques that can protect sensitive data from unauthorized access while still allowing it to be analyzed by machines.
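One family of such techniques is homomorphic encryption, which lets a machine compute on data while it remains encrypted. The sketch below implements a textbook version of the Paillier cryptosystem, chosen here as an illustration because it supports adding encrypted numbers. The tiny primes make it completely insecure; it only shows the idea, and real systems should use an audited library with large keys.

```python
import random
from math import gcd

# Toy-sized primes for illustration only; real keys use primes hundreds of digits long.
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
G = N + 1                                     # common simple choice of generator
LAM = (P - 1) * (Q - 1) // gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                          # modular inverse (Python 3.8+)


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N using fresh randomness r coprime to N."""
    while True:
        r = random.randrange(1, N)
        if gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover m using L(x) = (x - 1) // N and the private values LAM and MU."""
    l_value = (pow(c, LAM, N_SQ) - 1) // N
    return (l_value * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N_SQ


# A server could total two encrypted salaries without ever seeing either one.
encrypted_total = add_encrypted(encrypt(52000), encrypt(61000))
print(decrypt(encrypted_total))  # 113000
```

Only the holder of the private values (`LAM`, `MU`) can decrypt the result, while whoever performs the addition works purely on ciphertexts.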

Businesses and individuals must be aware of the potential risks associated with AI technology and take necessary precautions accordingly.

Job Displacement And Economic Effects

One of AI research’s biggest concerns is its potential to replace human workers.

The fear is that as AI technology advances and becomes more efficient, it could take over jobs traditionally performed by humans, leading to widespread job displacement.

For example, increased automation through AI could decrease employment opportunities for low-skilled workers in manufacturing industries that rely heavily on manual labor.

However, it’s not all doom and gloom. Many experts argue that while some jobs may be lost due to AI technologies, new job opportunities will also arise.

While discussions around the economic effects of AI continue to be controversial, one thing is certain: we must prepare for changes in our workforces brought about by advancing technology to ensure everyone benefits equitably from these innovations.

Ethical Dilemmas And Regulations

As AI research advances, ethical concerns and regulations are becoming increasingly important. One major ethical dilemma is the potential for bias and discrimination in AI algorithms, which can reflect and amplify existing societal biases.

For example, facial recognition technology has been criticized for racially biased results.

Job displacement due to automation is a significant economic effect of AI adoption. As more tasks become automated, many workers may lose their jobs or need to adapt to new roles requiring different skills.

Various regulations, such as the European Union’s AI Act, have been proposed or implemented worldwide to address these issues.

We must continue to grapple with these ethical challenges as we push forward with AI innovation.

Future Of AI Research

Research in AI is expected to explore potential applications and integration with emerging technologies, emphasizing continued development while ensuring AI’s ethical and responsible use.

Potential Applications And Integration With Emerging Technologies

As AI research continues to evolve, new applications for artificial intelligence are emerging and integrating with other cutting-edge technologies. Here are some potential applications:

AI-powered drones, robots, and autonomous vehicles could revolutionize the transportation industry.

AI can be integrated with blockchain technology for enhanced security and transparency in industries such as finance and healthcare.

Combining AI and augmented reality (AR) could enable more immersive experiences in fields like education and entertainment.

AI can be used for personalized medicine, analyzing individual patient data to tailor treatments and diagnoses.

Integrating AI with the Internet of Things (IoT) can enhance automation capabilities in smart homes, cities, and manufacturing processes.

With advances in natural language processing (NLP), chatbots and virtual assistants could become even more human-like in their interactions.

Integration of machine learning algorithms with big data analytics can aid in predicting customer behavior patterns for targeted marketing strategies.

AI-based precision farming techniques can optimize crop yields while minimizing environmental impact in the agriculture industry.

These potential applications highlight the vast possibilities for the future of AI research as it continues to integrate with emerging technologies across various industries.

Investment And Funding Trends

The demand for AI research and development continues to grow, resulting in increased investment and funding. The global AI market was projected to reach $119.78 billion in 2022 and to keep growing rapidly.

In fact, according to a study by Accenture, investing in artificial intelligence could double economic growth rates by 2035.

This bold prediction helps explain why businesses across various industries are adopting intelligent systems that leverage data mining algorithms, pattern recognition software, or deep learning techniques such as neural networks.

Importance Of Continued Research And Development

Continued research and development in AI is crucial to unlock the full potential of this transformative technology.

There is still much more to be discovered, developed, and refined in all areas of AI research, including machine learning techniques, natural language processing and understanding, robotics and automation, computer vision, cognitive computing, data mining, and pattern recognition.

Moreover, continued research can help address ethical concerns surrounding AI’s impact on society, such as bias and discrimination prevention measures.

It could also mitigate job displacement effects or privacy/security concerns, ensuring responsible use of the technology for a brighter future.

Ensuring Ethical And Responsible Use Of AI

As AI advances and becomes more integrated into our daily lives, we must ensure its ethical and responsible use.

One primary concern is the potential for bias and discrimination in AI algorithms, which can lead to unfair treatment of certain groups.

Privacy and security are also significant considerations when it comes to AI.

As AI collects immense amounts of data about individuals, strict regulations must be in place to protect this information from misuse or hacking attempts.

To address these issues, governments worldwide are beginning to implement regulations on developing and deploying AI systems.

Companies that integrate AI into their operations must also take responsibility for ensuring their algorithms are transparent, unbiased, and secure.

Insights and Lessons Learned from Experienced AI Researchers

The Importance of Clear Instructions and Well-Prepared Data Sets in Developing Effective Machine Learning Code

One critical insight that experienced AI researchers have gained is the importance of clear instructions and well-prepared data sets in developing effective machine learning code.

Machine learning models rely heavily on data, which must be appropriately labeled, formatted, and organized to ensure accurate results.

Without clear instructions and well-prepared data sets, machine learning algorithms used in AI applications can become unreliable and produce inaccurate results.

This can lead to wasted time and resources and potential errors that could impact the entire system’s performance.

To address this issue, experienced AI researchers have developed best practices for preparing data sets and providing clear instructions to machine learning algorithms.

These practices include using standardized formats for labeling data, creating detailed documentation for each process step, and conducting thorough testing to ensure accuracy.
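Checks like these can be partly automated. The sketch below validates a data set against a hypothetical schema: required fields, a fixed label vocabulary, and unique IDs. The field names and labels are assumptions for illustration; in practice the schema would come from the project's own labeling documentation.

```python
from typing import Dict, List

# Hypothetical labeling schema: every example needs these fields,
# and labels must come from a fixed, documented vocabulary.
REQUIRED_FIELDS = {"id", "text", "label"}
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def validate_examples(examples: List[Dict[str, str]]) -> List[str]:
    """Return human-readable problems; an empty list means every row passed."""
    problems = []
    seen_ids = set()
    for index, example in enumerate(examples):
        missing = REQUIRED_FIELDS - set(example)
        if missing:
            problems.append(f"row {index}: missing fields {sorted(missing)}")
            continue
        if example["label"] not in ALLOWED_LABELS:
            problems.append(f"row {index}: unknown label {example['label']!r}")
        if example["id"] in seen_ids:
            problems.append(f"row {index}: duplicate id {example['id']!r}")
        seen_ids.add(example["id"])
    return problems


rows = [
    {"id": "1", "text": "Great product", "label": "positive"},
    {"id": "2", "text": "Meh", "label": "mixed"},
    {"id": "1", "text": "Bad", "label": "negative"},
    {"id": "3", "text": "No label here"},
]
for problem in validate_examples(rows):
    print(problem)
```

Running a validator like this before every training run catches labeling mistakes early, when they are cheapest to fix.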

Exploring Various Methods and Applications of Machine Perception, Reinforcement Learning, and Deep Learning

Experienced AI researchers have also explored various methods and applications of machine perception, reinforcement learning, and deep learning to improve machine learning models.

These techniques involve training machines to recognize patterns in large data sets, often through iterative, trial-and-error processes.

Machine perception involves teaching machines to recognize objects or patterns in visual or auditory input.

This technique has been used in various applications, from self-driving cars to voice recognition software.

Reinforcement learning involves training machines with positive feedback (rewards) or negative feedback (penalties) for their actions.

This technique has been used in applications such as game-playing algorithms.
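The trial-and-error loop behind reinforcement learning can be shown with tabular Q-learning, one standard algorithm among many. In this sketch, the five-cell corridor environment, the reward of 1 for reaching the goal, and the hyperparameters (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`) are all hypothetical choices for illustration.

```python
import random

N_STATES = 5          # cells 0..4 in a line; the agent starts at 0
GOAL = N_STATES - 1   # reaching cell 4 ends the episode with reward 1
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right


def step(state: int, move: int):
    """Environment: move within the corridor; reaching the goal pays reward 1."""
    next_state = min(max(state + move, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore: try a random action
            else:
                best = max(q[state])  # exploit, breaking ties at random
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move Q(s, a) toward the reward plus the discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state, steps = next_state, steps + 1
    return q


q = q_learning()
policy = ["right" if row[1] > row[0] else "left" for row in q[:GOAL]]
print(policy)
```

After training, the greedy policy should move right from every non-goal cell: the agent was never told where the goal is, yet rewards from earlier attempts propagated back through the Q-values.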

Deep learning involves using neural networks with multiple layers to analyze complex patterns in large data sets.

This technique has been used in applications such as image recognition software.

By exploring these various methods and applications of machine perception, reinforcement learning, and deep learning, experienced AI researchers have gained valuable insights into how these techniques can be applied across various industries and applications.

Discovering New Ideas and Uses for AI Technology

Through their work, experienced AI researchers have discovered new ideas and uses for AI technology that continue to expand its potential impact on various industries.

For example, AI has been used in healthcare to improve patient outcomes by analyzing medical data and predicting disease progression.

In finance, AI has also been used to detect fraudulent transactions and prevent financial crimes. AI has been used in manufacturing to optimize production processes and reduce waste.

As AI research evolves, experienced researchers will discover new ways to apply this technology across various industries.

By sharing their insights and lessons learned with others in the field, they can help ensure that these advances are used ethically and responsibly for everyone’s benefit.

Reading Habits for AI Researchers: Conversations, Videos, Papers, and Conference Talks

The Importance of Reading Papers and Journals

Reading papers and journals is essential to stay up-to-date on the latest developments in AI applications. Dedicating significant time to reading these materials is necessary as they provide valuable insights into new AI tasks and challenges.

To make the most out of your reading time, it’s crucial to be selective in choosing which papers and journals to read. Look for reputable sources that have been peer-reviewed or published by well-known organizations.

Consider seeking out papers that are relevant to your specific area of research.

It’s also helpful to take notes while reading. This can help you retain information better and make connections between different ideas presented in the material.

Consider discussing the paper with other researchers or colleagues who may have different perspectives on the topic.

The Value of Conversations with Other Researchers

In addition to reading papers and journals, conversations with other researchers can provide valuable insights into new tasks and challenges in AI research.

By discussing ideas with others in the field, you can gain new perspectives on problems you’re working on and potentially discover new areas for exploration.

To maximize the value of these conversations, seek out individuals with expertise in areas related to your research interests.

Consider attending networking events or contacting colleagues at other institutions with similar interests.

Be bold and ask questions during these conversations, even if they seem basic.

Everyone has a unique perspective on a problem, so even simple questions can lead to valuable insights.

The Benefits of Watching Videos

Watching videos is another way for AI researchers to improve their knowledge base. In particular, conference talks can be an excellent resource for staying up-to-date on the latest developments in the field.

When selecting which videos to watch, consider seeking talks relevant to your specific area of research.

Look for videos presented at reputable conferences or by well-known organizations.

While watching these videos, take notes on key ideas or concepts presented.

Consider discussing the video with other researchers or colleagues who may have different perspectives on the topic.

The Value of Attending Conferences and Listening to Talks

Attending conferences and listening to talks is an excellent way for AI researchers to stay up-to-date on the latest developments in the field.

These events provide an opportunity to learn from field experts, network with other researchers, and discover new areas for exploration.

When selecting which conferences or talks to attend, consider seeking events relevant to your specific area of research.

Look for events that feature speakers who are respected experts in their fields.

During these events, take notes on key ideas or concepts presented.

Consider discussing what you’ve learned with other researchers or colleagues who may have different perspectives on the topic.

Mental and Physical Health: Prerequisites for AI Research Motivations

The Importance of Mental and Physical Health in AI Research

Motivation is critical in AI research. Without it, researchers may struggle to stay focused on their work and may not produce the best possible results.

However, motivation alone is not enough. Researchers must also have robust mental and physical health to succeed in this field.

The ability to handle stress and maintain focus is essential for AI researchers. This is because the work can be incredibly mentally and physically demanding.

Researchers must manage long hours of intense concentration without feeling overwhelmed.

A healthy lifestyle and positive mindset set the foundation for successful AI research.

Researchers can ensure that they are physically and mentally at their best by prioritizing physical activity, healthy eating habits, and stress-reducing activities such as meditation or yoga.

Strategies for Maintaining Mental and Physical Health

Maintaining good mental and physical health requires intentional effort by researchers. Some strategies that can help include:

Regular exercise: Exercise has been shown to improve mood, reduce stress levels, increase energy levels, and improve cognitive function.

Healthy eating habits: A balanced diet rich in fruits, vegetables, whole grains, lean proteins, and healthy fats can provide the nutrients needed for optimal brain function.

Stress management techniques: Meditation or deep breathing exercises can help reduce stress levels.

Time management skills: Effective time management skills can help prevent burnout by ensuring researchers have adequate time for stimulating activities outside of work.

Social support networks: A supportive network of colleagues or friends can provide emotional support during difficult times.

By implementing these strategies into their daily routines, researchers can prioritize their mental and physical health while still achieving success in their work.

Showcase of Top AI Research Institutions and Individuals

Artificial Intelligence (AI) research is a rapidly growing field that has the potential to revolutionize various industries.

From natural language processing to robotics, AI research covers many areas. This article will showcase top AI research institutions and individuals leading this exciting field.

Leading AI Researchers

One of the most respected names in AI research is Andrew Ng.

He is an adjunct professor at Stanford University and co-founder of Google Brain, which focuses on deep learning algorithms for machine perception.

He also co-founded Coursera, which hosts his widely taken online machine learning and deep learning courses.

Another prominent figure in the field is Yoshua Bengio, a professor at the University of Montreal and a pioneer in deep learning models for natural language processing.

He co-founded Element AI, an artificial intelligence software company that provides business solutions.

Geoffrey Hinton is another notable researcher who helped develop backpropagation, a widely used algorithm for training neural networks.

He worked as a researcher at Google Brain until 2023 and is a professor emeritus at the University of Toronto.

Top AI Research Institutions and Companies

Stanford University and Caltech are two renowned institutions with top-notch AI research programs. Stanford’s Artificial Intelligence Laboratory focuses on developing intelligent systems that can perceive, reason, learn, and act autonomously.

The university collaborates with tech giants like Microsoft Research and IBM Research to advance their research efforts.

Caltech’s Center for Autonomous Systems & Technologies (CAST) conducts cutting-edge research in robotics, computer vision, control systems, and machine learning algorithms.

Their interdisciplinary approach brings together experts from different fields to solve complex problems related to autonomous systems.

Google Brain is another leading player in the field of AI research, with its focus on deep learning models for image recognition, speech recognition, natural language processing, and more.

They have significantly contributed to open-source projects such as TensorFlow, a popular machine-learning framework.

Visualization and AI Research

Visualization is an essential aspect of AI research that enables researchers to gain insights into complex data.

It allows them to identify patterns, anomalies, and relationships that may not be apparent through raw data analysis.

Data visualization techniques such as heatmaps, scatter plots, and network graphs are commonly used in AI research.

For instance, visualizing neural networks can help researchers understand how the network processes information and identify potential errors or inefficiencies.

Similarly, visualizing large datasets can help researchers identify trends and patterns that may not be evident through statistical analysis alone.
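Heatmaps are often used to visualize a classifier's confusion matrix, showing at a glance which classes get mixed up. To keep the sketch dependency-free, it renders the heatmap as text; in practice you would more likely pass the matrix to a plotting library (for example, matplotlib's `imshow`). The labels and predictions below are made up for illustration.

```python
from typing import List, Sequence

SHADES = " .:-=+*#%@"  # from light to dark: darker means a larger count


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def text_heatmap(matrix: List[List[int]]) -> str:
    """Map each cell to a shade character proportional to its share of the largest count."""
    peak = max(max(row) for row in matrix) or 1
    scale = len(SHADES) - 1
    return "\n".join("".join(SHADES[round(v / peak * scale)] for v in row) for row in matrix)


# Hypothetical results for a three-class classifier.
y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 1, 2, 2, 0]
matrix = confusion_matrix(y_true, y_pred, n_classes=3)
print(matrix)  # [[2, 1, 0], [0, 3, 0], [1, 0, 2]]
print(text_heatmap(matrix))
```

A dark diagonal means mostly correct predictions; dark off-diagonal cells point to specific class pairs the model confuses, which is often faster to spot visually than in a table of numbers.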

Neural Networks and Deep Learning Models

Neural networks are a fundamental component of AI research, loosely inspired by the structure and function of the human brain. They consist of interconnected nodes, or neurons, that process information and learn from experience.

Deep learning models are a subset of neural networks that use multiple layers to extract high-level features from input data.

These models have revolutionized several areas of AI research, such as computer vision, natural language processing, speech recognition, and more.

They have achieved state-of-the-art performance in tasks such as image classification, object detection, and language translation.
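To make the idea of layered feature extraction concrete, here is a minimal sketch of a two-layer network that computes XOR, a function no single threshold neuron can represent. The weights are hand-picked for illustration (an assumption; a real network would learn them from data, for example with backpropagation), and the helper names `step`, `neuron`, and `xor_network` are hypothetical.

```python
# A minimal two-layer feedforward network with hand-picked weights
# (illustrative only; real networks learn their weights from data).


def step(x: float) -> int:
    """Threshold activation: the neuron fires (1) when its weighted input is positive."""
    return 1 if x > 0 else 0


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def xor_network(a: int, b: int) -> int:
    # Hidden layer: two neurons each detect a simple feature of the raw input.
    h_or = neuron([a, b], [1, 1], -0.5)   # fires if at least one input is 1
    h_and = neuron([a, b], [1, 1], -1.5)  # fires only if both inputs are 1
    # Output layer combines the hidden features: "OR but not AND" is XOR.
    return neuron([h_or, h_and], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

The hidden layer turns the raw inputs into two intermediate features (OR and AND), and the output layer combines them into a higher-level result. Deep learning models stack many more such layers and learn the weights automatically instead of having them set by hand.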

Designing AI Systems

AI systems are designed to perform specific tasks efficiently with minimal human intervention. They can be trained using supervised or unsupervised learning algorithms to improve their accuracy.

The design process involves clearly defining the problem statement, collecting relevant data sets for training the model(s), selecting appropriate algorithms for the task, and testing them thoroughly before deploying them in real-world scenarios.
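The design steps above can be sketched end to end with a deliberately simple model. This is a hypothetical illustration, not a production workflow: it generates a toy two-class data set, holds out a test split, "trains" a nearest-centroid classifier, and checks held-out accuracy against an assumed 0.9 threshold before the model would be considered ready to deploy. The data, function names, and threshold are all assumptions.

```python
import random
from statistics import mean


def make_dataset(n, seed=0):
    """Hypothetical toy problem: points clustered around (0, 0) are 'low', around (5, 5) 'high'."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.choice(["low", "high"])
        center = 0.0 if label == "low" else 5.0
        data.append(((rng.gauss(center, 1.0), rng.gauss(center, 1.0)), label))
    return data


def train_test_split(data, test_fraction=0.25, seed=0):
    """Shuffle a copy of the data and hold out a fraction for testing."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_centroids(train):
    """'Training' for a nearest-centroid classifier: average the points of each class."""
    centroids = {}
    for label in {lbl for _, lbl in train}:
        pts = [p for p, lbl in train if lbl == label]
        centroids[label] = (mean(p[0] for p in pts), mean(p[1] for p in pts))
    return centroids


def predict(centroids, point):
    """Assign the label of the closest centroid (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda lbl: (point[0] - centroids[lbl][0]) ** 2 + (point[1] - centroids[lbl][1]) ** 2,
    )


def accuracy(centroids, test):
    """Fraction of held-out points whose predicted label matches the true label."""
    return sum(predict(centroids, p) == lbl for p, lbl in test) / len(test)


data = make_dataset(200)                 # 1. collect data (simulated here)
train, test = train_test_split(data)     # 2. hold out data the model never sees in training
model = fit_centroids(train)             # 3. fit the chosen algorithm
acc = accuracy(model, test)              # 4. test thoroughly on held-out data
ready = acc >= 0.9                       # 5. assumed deployment gate
print(f"held-out accuracy: {acc:.2f}, ready to deploy: {ready}")
```

Keeping the test split separate from training is the key design choice: accuracy measured on data the model has already seen would overstate how well it performs in real-world scenarios.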

AI systems have numerous applications across different industries, such as healthcare (diagnosis), finance (fraud detection), and manufacturing (quality control), making them one of the most sought-after technologies today.

Cloud-Native Open-Source Stack for Accelerating Foundation Model Innovation: Addressing Climate Change with AI Research

Scalable Platforms for AI Research

The demand for artificial intelligence (AI) research has been increasing.

With the growing need to address climate change, researchers are looking for ways to scale their platforms and accelerate innovation.

One solution is through cloud-native open-source stacks that provide a scalable and flexible platform for AI research.

Cloud-native open-source stacks offer an efficient way of building and deploying applications in the cloud. These stacks consist of tools, frameworks, and services enabling developers to build scalable applications quickly.

With these tools, researchers can quickly develop and deploy models at scale, reducing development time and costs.

One example of the open-source tooling used in such stacks is Intel’s oneAPI.

These tools provide a unified programming model that enables developers to write code once and run it across different architectures.

IBM’s Watson natural language processing library reportedly uses Intel’s oneAPI tools to accelerate its performance by up to 2x.

Crowdsourcing for AI Research

Crowdsourcing is another approach that researchers can use to scale their platforms and accelerate innovation.

Crowdsourcing involves outsourcing tasks or problems to a large group of people through an open call.

In AI research, crowdsourcing can be used to gather data, train models, or validate results.
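One common way to validate crowdsourced results is to collect several labels per item and aggregate them by majority vote, sending low-agreement items back for review. The sketch below assumes a hypothetical dictionary of worker labels and a hypothetical `majority_vote` helper; real crowdsourcing pipelines often use more elaborate aggregation models.

```python
from collections import Counter

# Hypothetical crowdsourced labels: each item was labeled by several workers.
crowd_labels = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["dog", "dog", "dog"],
    "img_003": ["cat", "dog", "bird", "cat"],
}


def majority_vote(labels, min_agreement=0.5):
    """Return (label, share of votes); the label is None if agreement is not above the threshold."""
    label, count = Counter(labels).most_common(1)[0]
    share = count / len(labels)
    return (label, share) if share > min_agreement else (None, share)


for item, labels in crowd_labels.items():
    print(item, majority_vote(labels))
```

Here `img_003` reaches only 50% agreement, so it comes back as `None` and would be flagged for further review rather than accepted.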

One example of crowdsourcing in AI research is Kaggle, a platform where data scientists compete in machine learning competitions.

These competitions involve solving real-world problems using machine-learning techniques. The winners receive cash prizes and recognition from industry leaders.

Another example is Foldit, an online game where players solve protein-folding puzzles using intuition and creativity.

This game has helped researchers solve some complex protein-folding problems more efficiently than traditional algorithms alone.

Addressing Climate Change with AI Research

AI research can address some of the world’s most pressing challenges, including climate change.

AI can be used to optimize energy consumption, reduce greenhouse gas emissions, and improve resource efficiency.

For example, AI can optimize buildings’ energy consumption by predicting occupancy patterns and adjusting heating and cooling systems accordingly.

This can reduce energy waste and lower carbon emissions.
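The occupancy idea can be sketched with a very simple predictor: estimate the probability that a room is occupied at each hour from past logs, then heat only the hours where occupancy is likely. The history, the setpoints (21 °C comfort, 16 °C setback), and the 0.5 threshold below are hypothetical; a real building system would use richer models and live sensor data.

```python
# Hypothetical history: for each past weekday, the hours (0-23) when the room was occupied.
history = [
    {8, 9, 10, 11, 13, 14, 15, 16},
    {9, 10, 11, 12, 13, 14, 15},
    {8, 9, 10, 11, 14, 15, 16, 17},
    {9, 10, 11, 13, 14, 15, 16},
]

COMFORT_C = 21.0  # assumed heating setpoint when occupants are expected
SETBACK_C = 16.0  # assumed energy-saving setpoint when the room is likely empty


def occupancy_probability(history, hour):
    """Fraction of past days on which the room was occupied at this hour."""
    return sum(hour in day for day in history) / len(history)


def heating_schedule(history, threshold=0.5):
    """Plan one setpoint per hour: heat only when occupancy is predicted to be likely."""
    return {
        hour: COMFORT_C if occupancy_probability(history, hour) >= threshold else SETBACK_C
        for hour in range(24)
    }


schedule = heating_schedule(history)
heated_hours = [h for h, sp in schedule.items() if sp == COMFORT_C]
print("heated hours:", heated_hours)
```

With this history, the schedule heats the hour slots 8 through 11 and 13 through 16, while rarely occupied slots such as 12 and 17 fall back to the setback temperature.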

AI can also monitor and predict weather patterns, enabling better disaster preparedness and response.

AI can help optimize supply chains by reducing waste and improving efficiency.

The Future of AI Research

As an AI researcher, you are at the forefront of a rapidly evolving field that is changing the world as we know it.

In this final section, we will summarize some key insights and lessons learned from experienced AI researchers, showcase top institutions and individuals in the field, and discuss the future of AI research.

The Difference between Artificial Intelligence and Human Intelligence

One of the most important lessons learned from experienced AI researchers is that there is a fundamental difference between artificial intelligence and human intelligence.

While machines can be trained to perform specific tasks with incredible accuracy, they lack the creativity, intuition, and empathy essential for many human endeavors.

As such, it is crucial to approach AI research with a clear understanding of its limitations.

Insights and Lessons Learned from Experienced AI Researchers

Experienced AI researchers have also emphasized the importance of reading widely to stay up-to-date with developments in the field.

This includes academic papers, conversations with other researchers, videos of talks and conferences, and even popular science books.

By staying informed about new techniques and technologies, you can ensure that your work remains relevant and impactful.

Mental and Physical Health: Prerequisites for AI Research Motivation

In addition to reading widely, AI researchers need to care for their mental and physical health.

Burnout is common in academia and can be particularly acute in AI research, where progress can be slow or unpredictable.

By prioritizing self-care activities like exercise or spending time with loved ones outside of work hours, you can maintain your motivation over the long term.

Top Institutions and Individuals in AI Research

Among leading institutions, Google’s DeepMind has made significant breakthroughs in machine learning algorithms.

OpenAI has been at the forefront of research into natural language processing and robotics, and the Allen Institute for AI has contributed significantly to computer vision.

Meanwhile, individuals such as Fei-Fei Li, Yoshua Bengio, and Geoffrey Hinton have all shaped the field through major contributions.

Looking toward the future, AI research will almost certainly remain a critical area of study for years to come.

As we confront global challenges like climate change, disease outbreaks, and economic inequality, AI will play an increasingly important role in helping us understand complex systems and make informed decisions.

By staying curious, knowledgeable, and committed to your work as an AI researcher, you can help shape this exciting future.

Conclusion

We hope this overview of the future of AI research has been informative and inspiring.

Remember: by prioritizing your mental and physical health, reading widely to stay up to date with developments in the field, learning from the insights of experienced researchers, and following the examples set by top institutions, you can make a meaningful contribution to this rapidly evolving field.

AI research has rapidly evolved over the years and has significantly advanced in various fields, such as machine learning, natural language processing, and computer vision.

However, ethical considerations regarding bias, privacy, and job displacement must also be addressed.

The future of AI research holds immense potential for emerging technologies and economic growth, but realizing that potential will require responsible use.

Keep pushing boundaries!

FAQ

What is AI research?

AI research refers to the scientific study of artificial intelligence and its applications in various fields, including computer science, engineering, mathematics, cognitive science, and psychology. This research aims to develop algorithms and models that enable machines to perform intelligent tasks such as learning from data or recognizing patterns.

How has AI research evolved?

AI research has evolved significantly over the past few decades due to the increased availability of data and computational resources. Earlier approaches focused on rule-based systems that relied on human experts to define rules for decision-making. Recent advances in machine learning, however, have enabled computers to learn from vast amounts of data without being explicitly programmed.

What are the current challenges in AI research?

Some current challenges faced by AI researchers include improving the interpretability and explainability of machine learning models, addressing ethical concerns regarding algorithmic bias and privacy, ensuring robustness against adversarial attacks, and handling uncertainty in real-world settings.

What are the potential benefits of AI research for society?

AI research has numerous potential benefits for society, such as improved healthcare outcomes through disease diagnosis and drug discovery, better resource allocation through optimized supply chain management and logistics planning, and enhanced productivity through automated processes in industries like manufacturing and agriculture, all while reducing waste and increasing cost-efficiency.
